Is vibe coding the risk or just poor architecture?

Is vibe coding with LLMs truly creating new security risks, or are most vulnerabilities the result of flawed logic and weak system design?

Large Language Models are rapidly becoming coding partners, a trend dubbed “vibe coding.” This intuition-driven development style, supercharged by AI, lets developers generate code from natural-language prompts with unprecedented speed

As vibe coding gains popularity, especially via tools like GitHub Copilot, Replit’s Ghostwriter, and Cursor

The Rise of “Vibe Coding” in Software Development

Vibe coding is essentially coding by prompt and intuition. Instead of meticulously planning or hand-writing every line, developers describe desired functionality to an AI and accept the suggested code, iterating until it “feels” right

This approach can indeed accelerate development. Studies have shown AI coding assistants help developers code 30–50% faster, reduce boilerplate, and even nudge improvements in docs and testing

However, the “move fast and break things” ethos behind vibe coding has raised eyebrows among security experts. The very features that make AI-assisted development attractive – speed, automation, and the ability for even junior coders to produce sophisticated code – can become a double-edged sword. As we’ll explore, vibe coding isn’t inherently dangerous, but it amplifies longstanding software engineering pitfalls: lack of rigor, inadequate review, and neglected security design

Security Concerns with AI-Generated Code

Early evidence suggests that LLMs often generate insecure code by default if not guided otherwise. In April 2025, Backslash Security researchers tested multiple popular LLMs (OpenAI GPT variants, Anthropic Claude, Google Gemini, etc.) by giving them “naïve” coding prompts (just asking for a feature with no security instructions). The result was alarming: all models produced code containing vulnerabilities in at least 4 of the OWASP Top 10 CWE categories, and some models (including GPT-4 variants) churned out insecure code in 9 out of 10 cases

“One of the big risks about vibe coding and AI-generated software is: what if it doesn’t do security? That’s what we’re all pretty concerned about.” – Michael Coates, former Twitter CISO

Real-world anecdotes echo this concern. In mid-2025, a DevSecOps engineer described reviewing a pull request written with GitHub Copilot and feeling her “stomach drop” – the code contained a textbook SQL injection bug (user input directly concatenated into a query)

Security leaders warn that vibe coding can encourage bad habits if teams aren’t careful. “Vibe-coding means a junior programmer can suddenly be inside a racecar, rather than a minivan,” quipped Brandon Evans of SANS Institute

Beyond code-level bugs, LLM-assisted development introduces new classes of risks in the software supply chain. A striking example is the “Rules File Backdoor” attack demonstrated by researchers in March 2025

Despite these concerns, most experts do not believe the use of LLMs is inherently evil or destined to create insecure software. Rather, the consensus is that security issues stem from how we use these tools. If developers treat vibe coding as a license to bypass secure development practices – skipping design reviews, threat modeling, and testing in the rush to ship – then the outcome will be insecure, AI or not. As Semgrep CEO Isaac Evans put it, “Vibe coding itself isn’t the problem — it’s how it interacts with developers’ incentives… Programmers often want to move fast, [and] speed through the security review process.”

Case Study: The Tea App Data Leak

In July 2025, a breach at a startup called Tea made headlines for its severity and irony. Tea is a women’s safety and dating-review app – yet a hacker exploit resulted in tens of thousands of users’ private photos and messages being exposed to the public

According to Tea’s official incident report and outside audits, the root cause was not AI at all, but a basic security lapse in the app’s architecture. Tea had stored user-uploaded images (including selfies and photo IDs used for account verification) in a legacy cloud storage bucket that was left unprotected – essentially an open database with no access controls

So where did the vibe coding theory come from? LLM-driven development wasn’t mainstream until 2023–2024, and indeed Tea’s team claimed the vulnerable storage system was “legacy code… from prior to Feb 2024”

The key lesson from Tea’s breach is timeless: if you ignore secure architecture and deployment practices, your application will be vulnerable – no matter how the code was produced. An internal post-mortem suggested that a basic threat modeling exercise could have prevented the disaster. Had the developers paused to ask “What could go wrong with storing user photos? How might an attacker abuse this?” – they would have immediately flagged “unauthorized public access to images” as a threat and required authentication and access control on the storage bucket as a mitigation

In short, Tea’s breach wasn’t caused by AI generating malicious code; it was caused by human oversight (or lack thereof) in system design. Yet, it serves as a warning for AI-assisted development all the same: if a single developer can now build and deploy an app faster than ever (thanks to AI), it’s all the more critical that security fundamentals – like protecting sensitive data stores – aren’t forgotten in the frenzy. As one journalist quipped, talking to an app is sometimes like “talking to a really gossipy coworker” – anything you tell it might get shared with the world

Case Study: Jack Dorsey’s BitChat

Another example highlighting the implementation vs. AI question is BitChat, the app mentioned earlier built by Twitter co-founder Jack Dorsey. Dorsey “vibe-coded” BitChat in July 2025 as an experiment, using AI (Block’s Goose agent) to autonomously write and debug the code for a Bluetooth mesh messaging client

Despite branding BitChat as a secure, private messaging platform, Dorsey had to admit the software had never undergone an external security audit

BitChat’s slip-up reinforces that AI will happily produce technically working code which may still violate security best practices or trust assumptions. The AI doesn’t inherently understand concepts like “don’t trust user input” or “assume the network is hostile” unless those requirements are codified in the prompt or training data. And if a solo developer (even one as high-profile as Dorsey) skips a rigorous review, these issues go live. The good news is that BitChat’s flaws were caught early in beta, prompting Dorsey to delay the full release until improvements were made

The pattern we see with Tea and BitChat is telling: both apps were built under a “code now, fix later” mentality; both had glaring security omissions (open storage, no identity verification) that are logic and design issues, not exotic bugs introduced by AI “misunderstanding” syntax. Yet the ease of rapid development with AI perhaps enabled those omissions to go unchecked until after launch. Vibe coding, if done irresponsibly, can create a false sense of security – an “illusion of productivity” where everything comes together quickly but the result is a fragile system with hidden cracks

Most Breaches are Born of Bad Logic, Not AI Malice

Looking at a broader set of 2024–2025 security incidents, most evidence supports the idea that flawed implementation logic and misconfigurations are the culprits, whether or not an AI was involved in coding. For instance, cloud security firm Wiz in 2025 investigated a severe authentication flaw in a platform (pseudonymously dubbed “Base44”) and traced it to a single faulty assumption in the code. The system allowed joining any customer’s tenant simply by knowing or guessing a public app_id – a single-parameter auth bypass that put potentially millions of users at risk

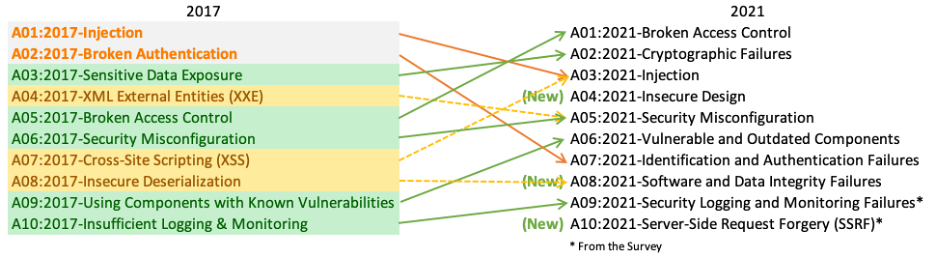

These examples highlight an important truth: the majority of security bugs we see – even in this AI era – are not novel “AI” bugs but the same old mistakes (broken access control, injection flaws, bad assumptions) appearing in new guises. LLMs can inadvertently reproduce these mistakes if they exist in training data or if the prompt doesn’t specify security requirements. But an LLM is not willfully introducing vulnerabilities; it has no concept of malice or ethics. The responsibility lies with developers and organizations to guide the AI, review its output, and architect systems securely from the start. As OWASP’s Secure-by-Design guidance emphasizes, building in security from the design phase is far more effective than patching later

Secure-by-Design for the “Vibe Coding” Era

LLMs are here to stay in software development, and outright shunning them is neither practical nor necessary. The focus instead should be on integrating security into AI-assisted workflows – effectively, vibe coding with guardrails. Experts refer to this balanced approach as moving from reckless “vibe coding” to “structured velocity” or “vibe securing,” where you still harness high-speed AI-driven development but within a robust security framework

-

Threat Modeling & Architectural Risk Analysis (Before Coding): Before a single line of AI-generated code is accepted, teams should conduct quick threat modeling sessions for new features. Ask the fundamental questions: “What am I building? What could go wrong? Who might attack this, and how would they do it?”

. Even a 30-minute brainstorm can reveal glaring needs – e.g. “We’re storing personal images; how do we prevent unauthorized access?” – and lead to concrete security requirements (like “images must be stored with access controls and encryption”) . Baking these requirements in at design time means when you prompt an AI or write code, you include those security constraints. LLMs actually handle explicit instructions well: Backslash’s study showed that adding “Make sure to follow OWASP secure coding best practices” to a prompt significantly improved the security of generated code (for many models, insecure outputs dropped from ~80% to ~20%) . In short, secure architecture + clear prompting can largely prevent the AI from falling into known traps. Threat modeling and risk analysis ensure you don’t overlook broader system issues (like open buckets or missing auth) in the rush to develop. - Secure Coding Standards and AI Guidance: Developers should treat AI like a junior engineer – powerful, fast, but needing guidance. Organizations can create secure coding guidelines for AI: e.g., always use prepared statements for database queries, handle user input with validation, implement logging and error handling in a safe manner, etc. These guidelines can be turned into prompt templates or rules fed into the AI agent. Some companies use “rule files” or policy frameworks to constrain AI suggestions (for instance, disallowing certain insecure functions or patterns). By instructing the LLM up front (or via an intermediary tool) to avoid insecure practices, you drastically reduce the chance of an unsafe snippet being produced. As an example, after the Copilot SQL injection incident above, a team could mandate that all database query generation by AI must use parameter binding. The AI, if properly guided, will then provide code that uses placeholders instead of string concatenation. Secure-by-default libraries and frameworks can also be leveraged – if the AI is asked to use a high-level API that inherently sanitizes inputs, the resulting code will be safer.

-

Human-in-the-Loop: Code Reviews and Pairing: Never let AI-generated code into production without human review. This point cannot be stressed enough. A “human firewall” of diligent code reviewers can catch the issues an AI (or a hurried dev) misses

. Organizations should enforce peer review for all code, especially AI-written segments . During review, the reviewer should explicitly consider security questions (perhaps using a checklist: e.g. “Are inputs validated? Are outputs encoded? Are auth checks in place? Could this logic be abused?” ). Many vulnerabilities are obvious once a fresh set of eyes looks – e.g., any experienced reviewer scanning Tea’s code would have immediately flagged “Hey, why are we not checking auth on this data fetch from storage?” . Instituting pair programming or at least rubber-duck debugging with AI can also help – for example, a developer can explain to a colleague (or even to the AI itself) what the code is supposed to do, which often exposes hidden assumptions or mistakes. The main idea is to not treat AI output as gospel; it must be vetted like any code written by an intern or outsider. -

Automated Security Testing (Shift-Left Tooling): Augment the human reviewers with tools: static application security testing (SAST), dynamic testing (DAST), dependency vulnerability scanners, and so on. These can be integrated into the CI/CD pipeline so that even AI-written code is subjected to automated scrutiny before merge or deployment. Modern SAST tools (some even AI-powered themselves) can flag common issues in code, and they should be tuned to recognize patterns that an AI might introduce. For instance, if an AI tends to produce some insecure default, a linting rule or SAST check can be added to catch that pattern. In 2025, researchers introduced the concept of an AI code security scanner that can intercept model outputs and check them for the Top 10 CWEs

. Such “AI guardian” tools could become standard – essentially AIs that fix or warn about the mistakes of the coding AIs. Regardless, the goal is to “shift left” security, embedding it early: don’t wait for a pen-test after release to discover an obvious bug that could have been caught by npm audit or a unit test. Remember that attackers are using AI too – they are crafting exploits and scanning for weaknesses at machine speed . Automated testing helps even the odds. -

Secure-by-Design Culture and Training: Perhaps most importantly, foster a culture where security is a core value, not an afterthought. CISA and industry groups in 2024 have been pushing the mantra of Secure by Design – meaning developers and product teams should make deliberate decisions to harden their software as it’s being built, rather than bolting on fixes later

. When using LLMs, developers should be educated on the common failure modes of AI-generated code. For example, they should know that LLMs might omit certain checks unless asked, or that an AI might not know the context of a secret and could hard-code it or log it by mistake. Teams could institute a practice of writing “abuse cases” alongside user stories (e.g., “User can upload photo” and an accompanying “Abuse case: attacker tries to retrieve someone else’s photo – what happens?”) . This trains developers (and management) to think adversarially and not get swept up in just the happy-path vibes. Leadership should set expectations that velocity is not just feature velocity, but secure velocity. One practical step is including security acceptance criteria in the Definition of Done for tasks – e.g., “No credentials in code, input validation implemented, sensitive actions audited, code reviewed by X” must be checked off before calling a feature complete . By defining up front that a story isn’t done until it meets security requirements, you counteract the tendency to say “it works, ship it” that vibe coding promotes .

Finally, organizations should empower developers to push back on unsafe demands. If an engineer feels an AI-generated module is too opaque or risky, they should have time to refactor it or consult a security engineer. It’s far better to delay a launch than to launch with a known vulnerability that could lead to a breach. The Tea and BitChat examples show that user trust (and company reputation) can be wiped out overnight by one security slip. As the saying goes, “If you don’t consciously choose security measures, you are unconsciously choosing to have none.”

Conclusion

The question of whether LLM-based development is inherently a security risk can now be answered: No, the mere use of an LLM doesn’t doom a project’s security – but using LLMs without proper software engineering discipline certainly does. Most security issues attributed to “vibe coding” turn out to be symptoms of deeper problems: insufficient design rigor, lack of threat modeling, skipping tests, and ignoring secure-by-design principles. An AI will happily write code at lightning speed, but it’s the developer’s responsibility to steer that process safely – much like driving a high-performance car requires skill and caution. When teams fail to do so, the resulting vulnerabilities are often indistinguishable from those in any hastily-written human code. As one security veteran observed after the Tea breach, “This wasn’t a hack by some AI; it was an unlocked front door.”

On the flip side, when used judiciously, LLMs can actually enhance security – by automating away trivial code (reducing human error), suggesting safer alternatives (if prompted), and even assisting in generating tests or documentation for security-critical parts. We’ve seen AI quickly adapt when explicitly instructed to “write secure code”

In summary, LLM-assisted development (“vibe coding”) is not an existential threat to software security – complacency is. The major breaches of 2024–2025 teach us that rushing to deploy without foundational security measures is the real hazard. Whether code comes from a human or a machine, the same secure development lifecycle must apply. By infusing strong security practices (threat modeling, secure design, code review, testing) into the vibe coding process, organizations can enjoy the best of both worlds: rapid innovation and resilient, safe software. The goal should be to channel the creative, high-speed energy of vibe coding into a “structured velocity” that doesn’t sacrifice quality or security

The bottom line for developers and security experts alike is clear: Keep coding with those good vibes – but always trust, then verify. Speed is thrilling, but security is grounding: it keeps our innovations standing long after the hype fades. With secure-by-design principles as our compass, we can confidently embrace AI as a coding partner, not a security adversary, and avoid turning “move fast and break things” into breaking our users’ trust.